GSoC with mlpack

Weekly updates of the project - Transformer and BERT in mlpack

Author: mrityunjay-tripathi

Transformer and BERT in mlpack

Fri, June 05

Week 2 (25 May - 31 May)


Implementation of the Multihead Attention layer (#2375).

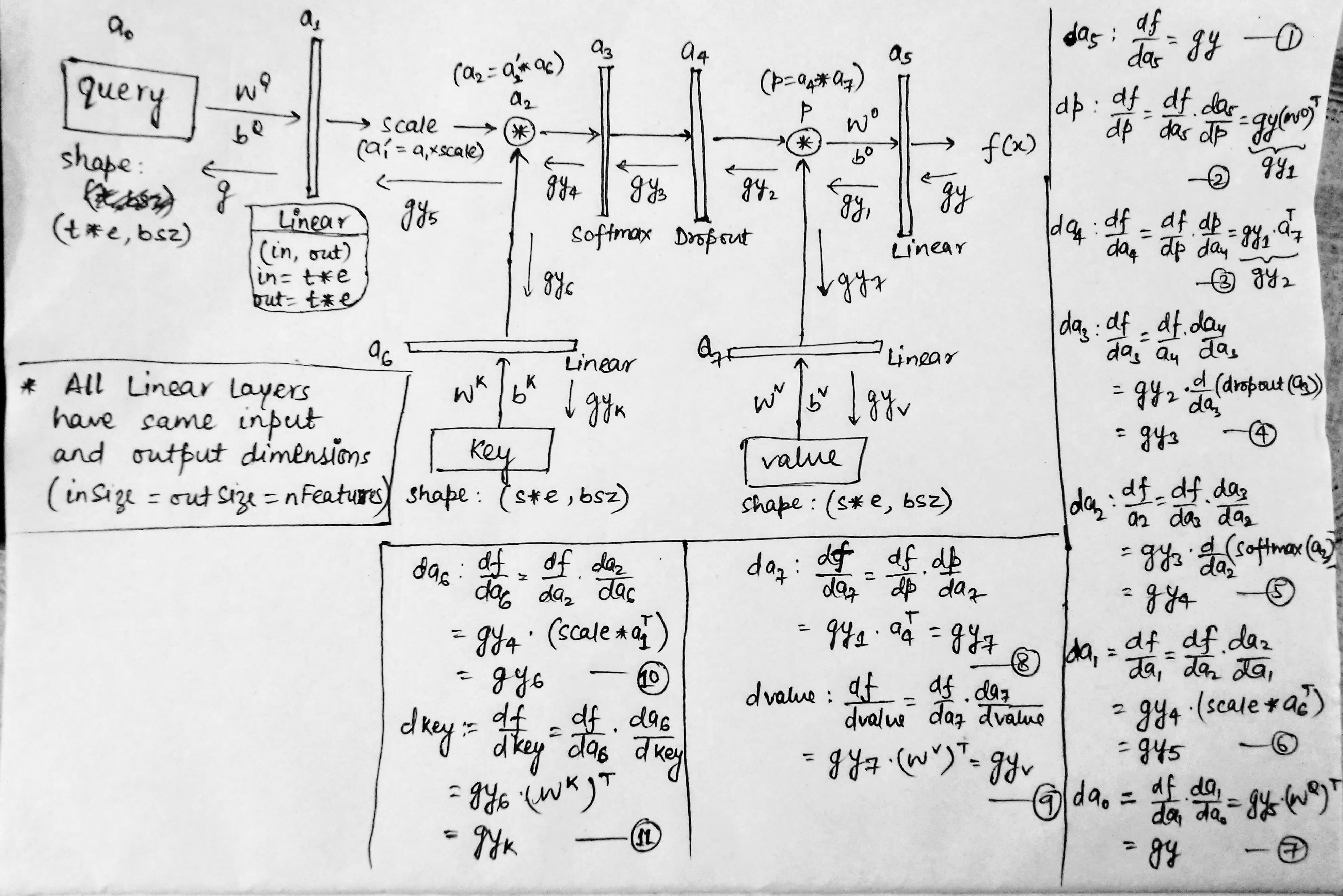

This week was less about implementation and more about the design and mathematics behind Multihead Attention. I tried to outline the backward propagation and the gradient calculation.
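For reference, this is one standard way to write the backward pass through a single attention head. It uses the paper's row-per-position notation: $L$ is the loss, $d_k$ is the key dimension, and $X_q$ is a name introduced here for the layer's query input. It is a derivation sketch, not necessarily the exact form used in the mlpack layer. mlpack stores matrices column-major in Armadillo, so an implementation there would work with the transposed shapes.

```latex
% Forward pass for one head (rows = sequence positions)
S = \frac{Q K^\top}{\sqrt{d_k}}, \qquad
P = \operatorname{softmax}_{\text{row}}(S), \qquad
O = P V

% Backward pass, given the upstream gradient dO = \partial L / \partial O
dV = P^\top dO, \qquad
dP = dO \, V^\top

% Row-wise softmax Jacobian applied to dP
dS_{ij} = P_{ij} \Big( dP_{ij} - \sum_{m} P_{im} \, dP_{im} \Big)

dQ = \frac{1}{\sqrt{d_k}} \, dS \, K, \qquad
dK = \frac{1}{\sqrt{d_k}} \, dS^\top Q

% Projections: Q_h = X_q W_h^Q, and Y = H W^O with H = Concat(head_1, ..., head_h)
\frac{\partial L}{\partial W^O} = H^\top \, dY, \qquad
dH = dY \, (W^O)^\top, \qquad
\frac{\partial L}{\partial W_h^Q} = X_q^\top \, dQ_h
```

Here $dH$ is split column-wise into the per-head $dO$ terms. The gradients for $W_h^K$ and $W_h^V$ follow the same pattern as $W_h^Q$.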

Thanks to Mikhail, I was able to design a proper layout for the MultiheadAttention class. I spent most of this week reading the paper "Attention Is All You Need" more deeply than before. I still have some doubts, and I am trying to resolve them as soon as possible. (Sounds like "Multihead At Tension" 😂)
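The following is a minimal, self-contained sketch of the forward pass defined in "Attention Is All You Need": $\mathrm{MultiHead}(Q,K,V) = \mathrm{Concat}(\mathrm{head}_1,\dots,\mathrm{head}_h)W^O$, where $\mathrm{head}_i = \mathrm{Attention}(QW_i^Q, KW_i^K, VW_i^V)$. It stores matrices as plain `std::vector`s with one row per sequence position. The names `Matrix`, `MatMul`, `Transpose`, `SoftmaxRows`, `ScaledDotProductAttention` and `MultiheadAttention` are hypothetical. They are not the mlpack API, which works on Armadillo matrices.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical row-major dense matrix: rows are sequence positions,
// columns are features. (mlpack itself uses column-major Armadillo.)
using Matrix = std::vector<std::vector<double>>;

// Plain triple-loop product: (n x m) * (m x p) -> (n x p).
Matrix MatMul(const Matrix& a, const Matrix& b) {
  assert(a[0].size() == b.size());  // inner dimensions must agree
  const std::size_t n = a.size(), m = b.size(), p = b[0].size();
  Matrix c(n, std::vector<double>(p, 0.0));
  for (std::size_t i = 0; i < n; ++i)
    for (std::size_t k = 0; k < m; ++k)
      for (std::size_t j = 0; j < p; ++j)
        c[i][j] += a[i][k] * b[k][j];
  return c;
}

Matrix Transpose(const Matrix& a) {
  Matrix t(a[0].size(), std::vector<double>(a.size()));
  for (std::size_t i = 0; i < a.size(); ++i)
    for (std::size_t j = 0; j < a[0].size(); ++j)
      t[j][i] = a[i][j];
  return t;
}

// Numerically stable softmax applied independently to each row.
void SoftmaxRows(Matrix& m) {
  for (auto& row : m) {
    const double maxVal = *std::max_element(row.begin(), row.end());
    double sum = 0.0;
    for (double& x : row) { x = std::exp(x - maxVal); sum += x; }
    for (double& x : row) x /= sum;
  }
}

// Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V
Matrix ScaledDotProductAttention(const Matrix& q, const Matrix& k,
                                 const Matrix& v) {
  const double scale = 1.0 / std::sqrt(static_cast<double>(k[0].size()));
  Matrix scores = MatMul(q, Transpose(k));
  for (auto& row : scores)
    for (double& x : row) x *= scale;
  SoftmaxRows(scores);
  return MatMul(scores, v);
}

// One projection matrix per head in wq, wk, wv; wo maps the
// concatenated heads back to the model dimension.
Matrix MultiheadAttention(const Matrix& query, const Matrix& key,
                          const Matrix& value, const std::vector<Matrix>& wq,
                          const std::vector<Matrix>& wk,
                          const std::vector<Matrix>& wv, const Matrix& wo) {
  Matrix concat(query.size());
  for (std::size_t h = 0; h < wq.size(); ++h) {
    const Matrix head = ScaledDotProductAttention(
        MatMul(query, wq[h]), MatMul(key, wk[h]), MatMul(value, wv[h]));
    // Append this head's columns to the concatenated output.
    for (std::size_t i = 0; i < head.size(); ++i)
      concat[i].insert(concat[i].end(), head[i].begin(), head[i].end());
  }
  return MatMul(concat, wo);
}
```

As a quick check, consider one head with identity projections and identical keys. Every attention weight over three positions is then 1/3, so each output row is simply the mean of the value rows.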