GSoC with mlpack

Weekly updates of the project - Transformer and BERT in mlpack

Author: mrityunjay-tripathi

Transformer and BERT in mlpack

Mon, July 06

Week 5 (29 June - 05 July)

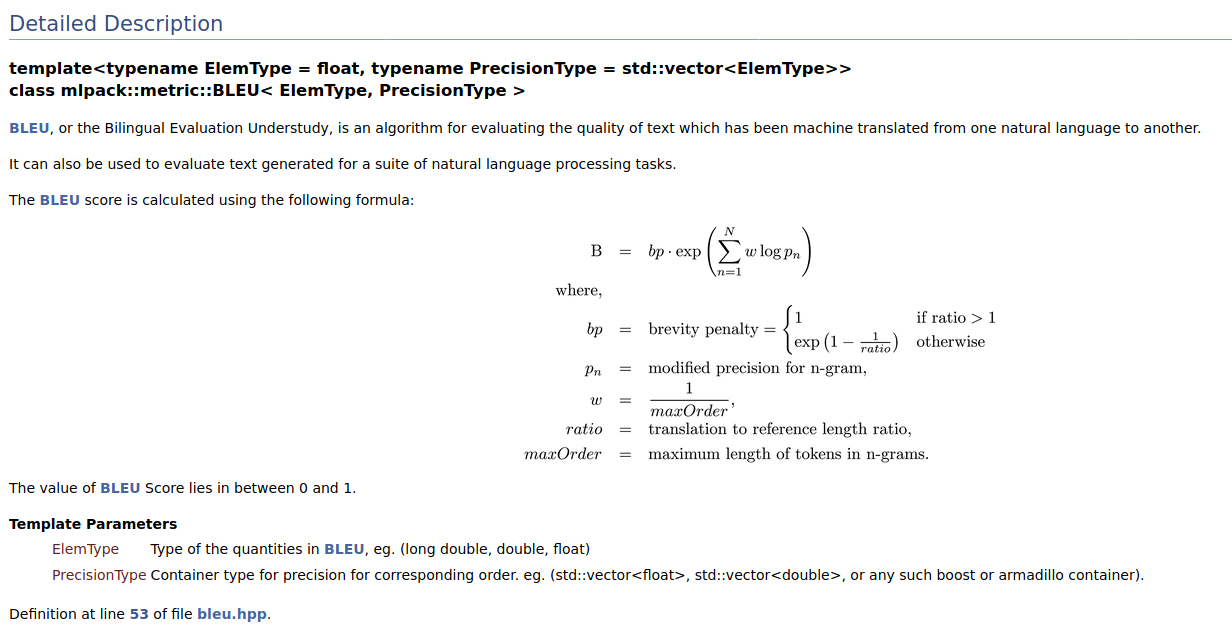

Hello, everyone! This week was I was able to implement single-head attention as promised previous week. Multi-head attention is a parallelized form of scaled dot product attention (we also call it single-head attention) along with some linear transformations. I was confused as how to implement multi-head attention, so I changed the approach and tried single-head attention. Now, there are various ways I can go after multi-head attention which I will be carrying this week. I am really really delighted that things are working now. The other thing that I done this week is adding documentation for BLEU Metric and this was really fun. I think, it's ready to be merged now.

Part of the documentation of BLEU Metric.

I was mostly ignorant about documentation untill now but surely there will be much more detailed documentation for every pull request from now onwards. Also, my first feature request in mlpack (Poisson NLL Loss) got merged this week 🎉🎉🎉😁.

For next week:

I will try to add documentation for scaled dot product attention.

Get the multi-head attention layer working as it should work. I hope there is no more next week for this thing 😅.

Test the addition on Transformer Encoder and Decoder.

See you next time. Be Healthy! Be Safe!