GSoC with mlpack

Weekly updates of the project - Transformer and BERT in mlpack

Author: mrityunjay-tripathi

Transformer and BERT in mlpack

Sun, June 07

Week 1 (01 June - 07 June)

The actual coding period has started. The experience I've gained while working with mlpack so far is invaluable. Until now, most of the pull requests I made were meant to get me familiar with the codebase, but from here on I expect to carry the project forward. I started this week by working on the Transformer Encoder and Decoder in the mlpack/models repository. This pull request aims to implement the Encoder and Decoder portions of the Transformer model.

The architecture of the Transformer Encoder is:

Image by Jay Alammar

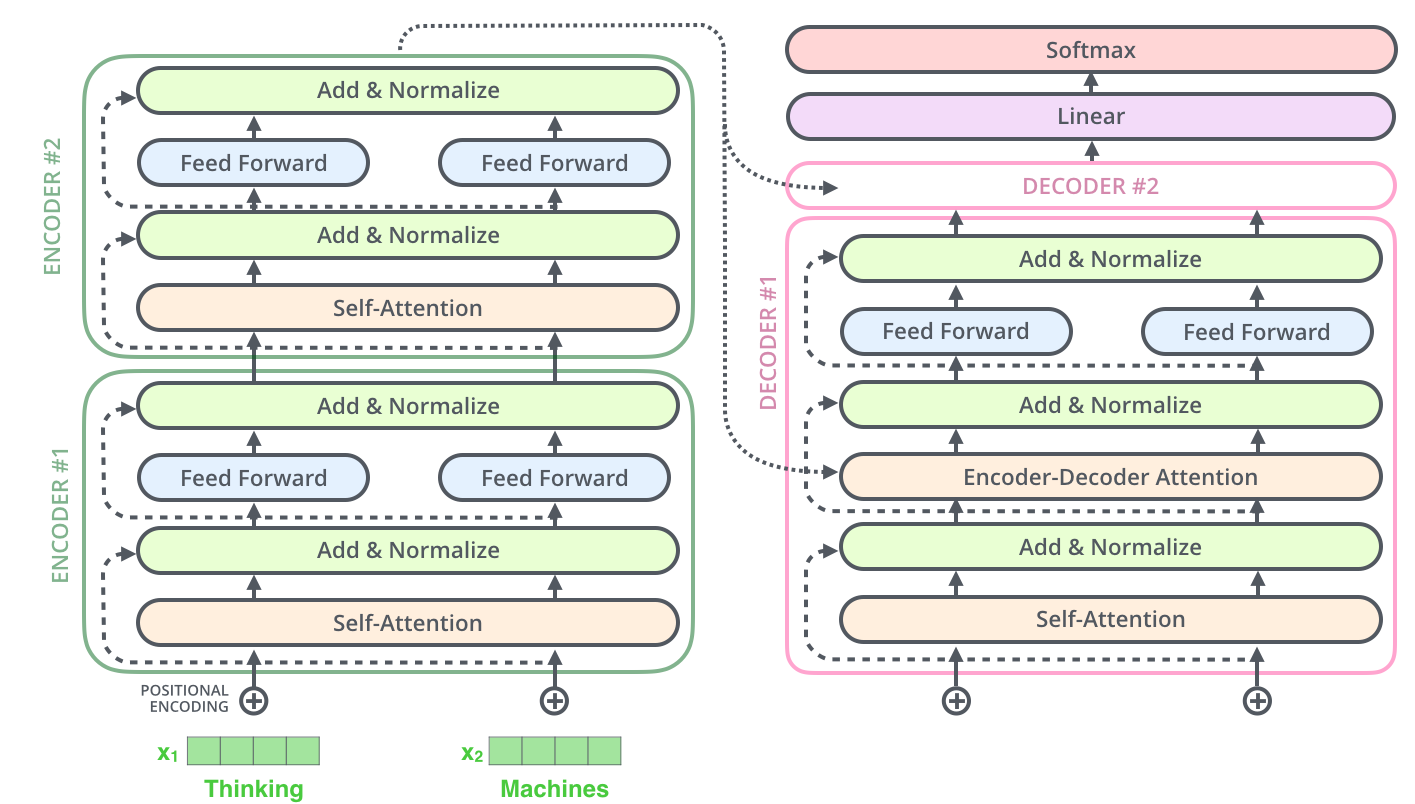

The architecture of the combined Transformer Encoder and Decoder is:

Image by Jay Alammar

Next, I defined a Policy class for the different types of attention mechanisms, as suggested by Mikhail. Next week I plan to focus on multihead attention so that it ends up with an efficient and clean API. You can help me with reviews and/or ideas here.
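To give a rough idea of what a policy class for attention could look like, here is a minimal sketch in plain C++. It does not use mlpack or Armadillo types, and every name in it (`DotProductScore`, `ScaledDotProductScore`, `Attend`) is hypothetical and not taken from the pull request. The scoring rule is a template parameter, so trying a different attention variant only means writing another small struct with a static `Score` function.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;

// Plain dot product of two equally sized vectors.
inline double Dot(const Vec& a, const Vec& b)
{
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i)
    sum += a[i] * b[i];
  return sum;
}

// Hypothetical policy: unscaled dot-product score.
struct DotProductScore
{
  static double Score(const Vec& query, const Vec& key)
  {
    return Dot(query, key);
  }
};

// Hypothetical policy: dot product scaled by 1 / sqrt(key dimension).
struct ScaledDotProductScore
{
  static double Score(const Vec& query, const Vec& key)
  {
    return Dot(query, key) / std::sqrt(static_cast<double>(key.size()));
  }
};

// Attention for a single query. The scoring rule comes from ScorePolicy;
// the softmax and the weighted sum of values are shared by all policies.
template<typename ScorePolicy>
Vec Attend(const Vec& query,
           const std::vector<Vec>& keys,
           const std::vector<Vec>& values)
{
  assert(!keys.empty() && keys.size() == values.size());

  // Raw score of the query against every key.
  Vec weights(keys.size());
  for (std::size_t i = 0; i < keys.size(); ++i)
    weights[i] = ScorePolicy::Score(query, keys[i]);

  // Softmax, subtracting the maximum score for numerical stability.
  const double maxScore = *std::max_element(weights.begin(), weights.end());
  double total = 0.0;
  for (double& w : weights)
  {
    w = std::exp(w - maxScore);
    total += w;
  }

  // Weighted sum of the value vectors.
  Vec output(values[0].size(), 0.0);
  for (std::size_t i = 0; i < values.size(); ++i)
  {
    assert(values[i].size() == output.size());
    for (std::size_t j = 0; j < output.size(); ++j)
      output[j] += (weights[i] / total) * values[i][j];
  }
  return output;
}
```

Calling `Attend<ScaledDotProductScore>(query, keys, values)` or `Attend<DotProductScore>(query, keys, values)` picks the scoring rule at compile time, so there is no virtual-call overhead. A multihead version would run this per head on projected inputs and concatenate the results.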

See you soon. Be Healthy! Be Safe!